In ECET 2026 CSE, Big Data topics like Hadoop and HDFS are crucial. HDFS is the storage backbone of Hadoop and exam questions often cover block size, replication, architecture, and advantages.

📘 Concept Notes

🌐 What is HDFS?

- HDFS = Hadoop Distributed File System.

- Designed to store and manage very large files across clusters of commodity hardware.

- Splits files into blocks and distributes them across multiple machines.

- Provides fault tolerance using replication.

⚙️ Key Features of HDFS

- Block Storage:

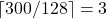

- File divided into blocks of default size 128 MB.

- Replication:

- Each block is stored multiple times (default replication factor 3).

- Ensures reliability even during node failures.

- Master–Slave Architecture:

- NameNode (Master): Stores metadata (directory structure, block info).

- DataNode (Slave): Stores actual blocks of data.

- Write Once, Read Many:

- Files cannot be modified in place once written (only appends are allowed).

- Provides high throughput access.
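The block storage, replication, and NameNode/DataNode roles listed above can be pictured with a small toy model. This is only a sketch: the function names `split_into_blocks` and `place_replicas` and the DataNode names `dn1` to `dn4` are made up for illustration, and the round-robin placement is a simplification (real HDFS uses a rack-aware placement policy).

```python
def split_into_blocks(file_size_mb, block_size_mb=128):
    """Return the size of each block; only the last block may be smaller."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    return [block_size_mb] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks, datanodes, replication=3):
    """Toy NameNode metadata: block index -> DataNodes holding a replica.

    Assumption: simple round-robin placement, not the real HDFS policy.
    """
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    metadata = {}
    for block_id in range(num_blocks):
        # Shift the starting node per block so replicas spread over the cluster.
        metadata[block_id] = [
            datanodes[(block_id + i) % len(datanodes)]
            for i in range(replication)
        ]
    return metadata


blocks = split_into_blocks(300)  # a 300 MB file with 128 MB blocks
block_map = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print(blocks)     # block sizes in MB
print(block_map)  # what the NameNode would remember (metadata only)
```

The dictionary plays the role of the NameNode (it holds only metadata), while the names inside each list stand for the DataNodes that would hold the actual block data.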

🔋 Formula – Total Storage Requirement in HDFS

If:

- File size =

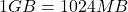

- Block size =

- Replication factor =

Then total storage required is:

![]()
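For quick practice, the exam-style calculation can be sketched in Python: number of blocks = file size divided by block size, rounded up, and storage = blocks × block size × replication factor. The function names here are hypothetical. The third function is added for comparison only, since HDFS does not pad the last block of a file, so real disk usage is roughly file size × replication factor.

```python
import math


def hdfs_blocks(file_mb, block_mb=128):
    """Number of blocks: file size divided by block size, rounded up."""
    return math.ceil(file_mb / block_mb)


def hdfs_storage_mb(file_mb, block_mb=128, replication=3):
    """Exam-style storage: every block is counted at full block size."""
    return hdfs_blocks(file_mb, block_mb) * block_mb * replication


def actual_usage_mb(file_mb, replication=3):
    """Approximate real usage: the last block only occupies its actual size."""
    return file_mb * replication


print(hdfs_blocks(1024))      # 8
print(hdfs_storage_mb(1024))  # 3072
print(hdfs_storage_mb(300))   # 1152
print(actual_usage_mb(300))   # 900
```

The two storage figures agree whenever the file size is an exact multiple of the block size, and differ only by the unused part of the last block otherwise.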

📐 Example

- File size =

- Block size =

- Replication factor =

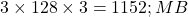

Number of blocks = ![]()

Storage required = ![]()

🛠 Applications of HDFS

- Storage of huge datasets (gigabytes to petabytes).

- Fault-tolerant data storage.

- Batch processing systems (MapReduce, Spark).

- Data transfer between distributed clusters.

- Basis for Big Data analytics frameworks.

🔟 10 Expected MCQs – ECET 2026

Q1. HDFS stands for:

A) Hadoop Distributed File Storage

B) Hadoop Distributed File System

C) High Data File System

D) Hadoop Data Framework Storage

Q2. Default block size in HDFS is:

A) 32 MB

B) 64 MB

C) 128 MB

D) 256 MB

Q3. Default replication factor in HDFS is:

A) 1

B) 2

C) 3

D) 4

Q4. The component storing metadata in HDFS is:

A) DataNode

B) NameNode

C) Secondary DataNode

D) TaskTracker

Q5. If file size = 1 GB, block size = 128 MB, replication factor = 3 → number of blocks = ?

A) 6

B) 8

C) 12

D) 24

Q6. In HDFS, actual data is stored in:

A) NameNode

B) DataNode

C) ResourceManager

D) JobTracker

Q7. Which is TRUE about HDFS?

A) Files can be updated anytime

B) Write once, read many

C) Does not support replication

D) Blocks are variable sized

Q8. Secondary NameNode is used for:

A) Storing data

B) Backing up metadata

C) Running tasks

D) Client communication

Q9. If file size = 300 MB, block size = 128 MB, replication factor = 3 → storage required = ?

A) 900 MB

B) 1152 MB

C) 128 MB

D) 600 MB

Q10. Which is NOT an advantage of HDFS?

A) Fault tolerance

B) Scalability

C) Optimized for large files

D) Real-time low-latency processing

✅ Answer Key

| Q.No | Answer |

|---|---|

| Q1 | B |

| Q2 | C |

| Q3 | C |

| Q4 | B |

| Q5 | B |

| Q6 | B |

| Q7 | B |

| Q8 | B |

| Q9 | B |

| Q10 | D |

🧠 Explanations

- Q1 → B: HDFS = Hadoop Distributed File System.

- Q2 → C: Default block size = 128 MB in Hadoop 2.x and later (it was 64 MB in Hadoop 1.x).

- Q3 → C: Default replication factor = 3.

- Q4 → B: NameNode stores metadata.

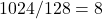

- Q5 → B: $1\ \text{GB} = 1024\ \text{MB}$; $1024 / 128 = 8$ blocks. Replication adds copies of each block, not new blocks.

- Q6 → B: Data stored in DataNodes.

- Q7 → B: Files are write-once, read-many.

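- Q8 → B: Secondary NameNode periodically merges the edit log into the filesystem image (checkpointing) and so keeps a copy of the metadata; it is not a standby replacement for the NameNode.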

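- Q9 → B: $\lceil 300 / 128 \rceil = 3$ blocks; storage = $3 \times 128 \times 3 = 1152\ \text{MB}$. This counts every block at full size; on a real cluster the last block occupies only its actual 44 MB, so physical usage is closer to $300 \times 3 = 900\ \text{MB}$.

- Q10 → D: HDFS is optimized for high throughput, not for real-time, low-latency access.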

🎯 Why Practice Matters

- HDFS questions are direct and formula-based in ECET.

- Easy marks can be scored by remembering block size, replication factor, and formula for storage requirement.